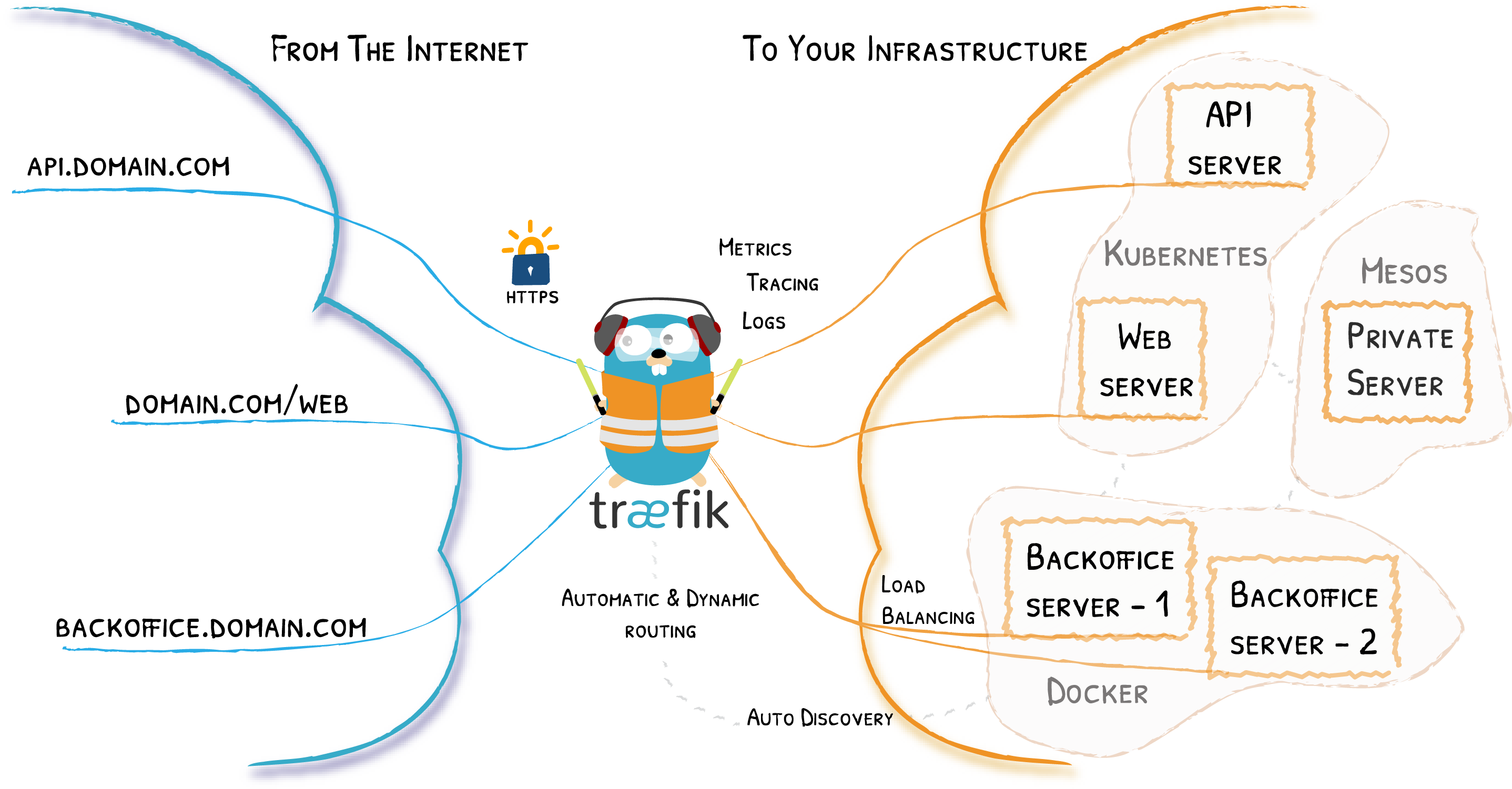

Traefik is a widely adopted open-source HTTP reverse proxy and load balancer that simplifies the routing and load balancing of requests for modern web applications. It boasts dynamic configuration capabilities and supports a multitude of providers, positioning itself as a versatile solution for orchestrating complex deployment scenarios. In this blog post, we will delve into the architecture of Traefik and dissect the key components of its source code to furnish a more nuanced understanding of its operational mechanics.

Traefik Architecture: A High-Level Overview

At its core, Traefik’s architecture is composed of several integral components that collaborate to facilitate dynamic routing and load balancing:

Static Configuration: These are foundational settings for Traefik, encompassing entry points, providers, and API access configurations. They can be specified via file, command-line arguments, or environment variables.

Dynamic Configuration: This covers the routing rules, services, and middlewares, all of which change with the state of the infrastructure. Traefik obtains this configuration from providers such as Docker, Kubernetes, and Consul Catalog.

Providers: Acting as the bridge between Traefik and service discovery mechanisms, providers are tasked with sourcing and conveying dynamic configuration to Traefik. Each provider is tailored to integrate with different technologies like Docker, Kubernetes, and Consul.

Entry Points: These designate the network interfaces (ports and protocols) where Traefik listens for inbound traffic.

Routers: Routers establish criteria for matching incoming requests by various parameters such as hostnames, paths, and headers. They are responsible for directing requests to the appropriate service.

Services: Services define the mechanisms through which Traefik communicates with backend systems that process the requests. These can be fine-tuned with distinct load balancing strategies and health checks.

Middlewares: As modular entities, middlewares are attached to routers and enable a range of functions including authentication, rate limiting, and request modification.

Plugins: Traefik can be extended with plugins, which augment its functionality. The plugin system empowers developers to contribute custom plugins.

The orchestration of these components is as follows:

- Traefik ingests the static configuration and begins listening on the specified entry points.

- Providers perpetually scan the infrastructure for configuration changes, relaying them to Traefik via a dynamic configuration channel.

- Traefik processes the dynamic configuration, updating its internal constructs—routers, services, and middlewares—to reflect these changes.

- As requests come in, Traefik matches them against router rules and channels them to the designated service.

- Services apply their load balancing logic to distribute the request to a backend server.

- Middlewares may be invoked at various stages in the request/response lifecycle to perform their respective duties.

This dynamic architecture enables Traefik to nimbly adjust to alterations within the infrastructure, automating the routing configuration in alignment with the deployed services.
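The request path described above can be sketched in a few lines of Go. This is a minimal illustration, not Traefik’s implementation: the `route` and `service` types and the `match` and `pick` functions are hypothetical names, the rule syntax is reduced to a host plus a path prefix, and the round-robin cursor is not safe for concurrent use.

```go
package main

import (
	"fmt"
	"strings"
)

// route is a hypothetical, simplified router: a host and a path prefix
// mapped to a service name.
type route struct {
	host       string
	pathPrefix string
	service    string
}

// service holds backend addresses and a cursor for round-robin selection.
type service struct {
	servers []string
	next    int
}

// pick returns the next backend in round-robin order.
func (s *service) pick() string {
	server := s.servers[s.next%len(s.servers)]
	s.next++
	return server
}

// match returns the service name of the first route whose host and
// path prefix both match the request.
func match(routes []route, host, path string) (string, bool) {
	for _, r := range routes {
		if r.host == host && strings.HasPrefix(path, r.pathPrefix) {
			return r.service, true
		}
	}
	return "", false
}

func main() {
	routes := []route{{host: "example.com", pathPrefix: "/api", service: "api"}}
	services := map[string]*service{
		"api": {servers: []string{"10.0.0.1:80", "10.0.0.2:80"}},
	}

	for i := 0; i < 3; i++ {
		name, ok := match(routes, "example.com", "/api/users")
		if !ok {
			fmt.Println("no route")
			continue
		}
		fmt.Println(name, "->", services[name].pick())
	}
}
```

Running the sketch alternates between the two backends, which is the behavior a round-robin service is expected to show.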

Source Code Analysis: Key Components

Now, let’s examine some of the pivotal components within Traefik’s source code:

1. Watcher and Listener:

The watcher and listener collaborate to detect and apply dynamic configuration changes. The watcher receives configuration messages from the providers and passes the resulting configuration to its registered listeners. Each listener then applies the update to the part of Traefik’s internal state it is responsible for.

Watcher:

pkg/server/configurationwatcher.go

// NewConfigurationWatcher creates a new ConfigurationWatcher.

func NewConfigurationWatcher(

routinesPool *safe.Pool,

pvd provider.Provider,

defaultEntryPoints []string,

requiredProvider string,

) *ConfigurationWatcher {

return &ConfigurationWatcher{

providerAggregator: pvd,

allProvidersConfigs: make(chan dynamic.Message, 100),

newConfigs: make(chan dynamic.Configurations),

routinesPool: routinesPool,

defaultEntryPoints: defaultEntryPoints,

requiredProvider: requiredProvider,

}

}

// Start the configuration watcher.

func (c *ConfigurationWatcher) Start() {

c.routinesPool.GoCtx(c.receiveConfigurations)

c.routinesPool.GoCtx(c.applyConfigurations)

c.startProviderAggregator()

}

// Stop the configuration watcher.

func (c *ConfigurationWatcher) Stop() {

close(c.allProvidersConfigs)

close(c.newConfigs)

}

// AddListener adds a new listener function used when new configuration is provided.

func (c *ConfigurationWatcher) AddListener(listener func(dynamic.Configuration)) {

if c.configurationListeners == nil {

c.configurationListeners = make([]func(dynamic.Configuration), 0)

}

c.configurationListeners = append(c.configurationListeners, listener)

}

func (c *ConfigurationWatcher) startProviderAggregator() {

log.Info().Msgf("Starting provider aggregator %T", c.providerAggregator)

safe.Go(func() {

err := c.providerAggregator.Provide(c.allProvidersConfigs, c.routinesPool)

if err != nil {

log.Error().Err(err).Msgf("Error starting provider aggregator %T", c.providerAggregator)

}

})

}

Listener:

cmd/traefik/traefik.go

// Server Transports

watcher.AddListener(func(conf dynamic.Configuration) {

roundTripperManager.Update(conf.HTTP.ServersTransports)

dialerManager.Update(conf.TCP.ServersTransports)

})

pkg/server/service/roundtripper.go

// RoundTripperManager handles roundtripper for the reverse proxy.

type RoundTripperManager struct {

rtLock sync.RWMutex

roundTrippers map[string]http.RoundTripper

configs map[string]*dynamic.ServersTransport

spiffeX509Source SpiffeX509Source

}

// Update updates the roundtrippers configurations.

func (r *RoundTripperManager) Update(newConfigs map[string]*dynamic.ServersTransport) {

r.rtLock.Lock()

defer r.rtLock.Unlock()

for configName, config := range r.configs {

newConfig, ok := newConfigs[configName]

if !ok {

delete(r.configs, configName)

delete(r.roundTrippers, configName)

continue

}

if reflect.DeepEqual(newConfig, config) {

continue

}

var err error

r.roundTrippers[configName], err = r.createRoundTripper(newConfig)

if err != nil {

log.Error().Err(err).Msgf("Could not configure HTTP Transport %s, fallback on default transport", configName)

r.roundTrippers[configName] = http.DefaultTransport

}

}

for newConfigName, newConfig := range newConfigs {

if _, ok := r.configs[newConfigName]; ok {

continue

}

var err error

r.roundTrippers[newConfigName], err = r.createRoundTripper(newConfig)

if err != nil {

log.Error().Err(err).Msgf("Could not configure HTTP Transport %s, fallback on default transport", newConfigName)

r.roundTrippers[newConfigName] = http.DefaultTransport

}

}

r.configs = newConfigs

}
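The Update method above follows a common reconciliation pattern: drop entries that disappeared, rebuild entries whose configuration changed, and create entries that are new. The sketch below isolates that pattern under simplified assumptions. The `transportConfig` type and the `reconcile` function are hypothetical and only report what would have to be done instead of building round trippers.

```go
package main

import (
	"fmt"
	"reflect"
)

// transportConfig is a hypothetical stand-in for a transport configuration.
type transportConfig struct {
	ServerName string
	MaxIdle    int
}

// reconcile compares the old and new configurations and reports which
// names must be removed, rebuilt, or created.
func reconcile(oldCfg, newCfg map[string]*transportConfig) (removed, changed, added []string) {
	for name, cfg := range oldCfg {
		next, ok := newCfg[name]
		switch {
		case !ok:
			removed = append(removed, name)
		case !reflect.DeepEqual(next, cfg):
			changed = append(changed, name)
		}
	}
	for name := range newCfg {
		if _, ok := oldCfg[name]; !ok {
			added = append(added, name)
		}
	}
	return removed, changed, added
}

func main() {
	oldCfg := map[string]*transportConfig{
		"a": {ServerName: "a.local", MaxIdle: 2},
		"b": {ServerName: "b.local", MaxIdle: 2},
		"c": {ServerName: "c.local", MaxIdle: 2},
	}
	newCfg := map[string]*transportConfig{
		"a": {ServerName: "a.local", MaxIdle: 2}, // unchanged
		"b": {ServerName: "b.local", MaxIdle: 8}, // changed
		"d": {ServerName: "d.local", MaxIdle: 2}, // new
	}
	removed, changed, added := reconcile(oldCfg, newCfg)
	fmt.Println("removed:", removed, "changed:", changed, "added:", added)
}
```

Unchanged entries are left alone, which is why a configuration refresh does not have to recreate every transport.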

2. Provider:

Providers are pivotal in Traefik’s architecture, interfacing with specific technologies to unearth and deliver configuration updates. The Provide function lies at the heart of the provider’s role, managing connection, discovery, and update processes.

Kubernetes Provider: Configuration Discovery

The Kubernetes provider within Traefik extracts configuration details from the Kubernetes API server, monitoring changes across Kubernetes resources like Ingress, IngressRoute, Service, and Middleware objects.

2.1. Kubernetes Client Initialization:

The provider initializes a client to connect with the Kubernetes API, utilizing this client to engage with the API and observe resource changes.

pkg/provider/kubernetes/crd/kubernetes.go

func (p *Provider) newK8sClient(ctx context.Context) (*clientWrapper, error) {

_, err := labels.Parse(p.LabelSelector)

if err != nil {

return nil, fmt.Errorf("invalid label selector: %q", p.LabelSelector)

}

log.Ctx(ctx).Info().Msgf("label selector is: %q", p.LabelSelector)

withEndpoint := ""

if p.Endpoint != "" {

withEndpoint = fmt.Sprintf(" with endpoint %s", p.Endpoint)

}

var client *clientWrapper

switch {

case os.Getenv("KUBERNETES_SERVICE_HOST") != "" && os.Getenv("KUBERNETES_SERVICE_PORT") != "":

log.Ctx(ctx).Info().Msgf("Creating in-cluster Provider client%s", withEndpoint)

client, err = newInClusterClient(p.Endpoint)

case os.Getenv("KUBECONFIG") != "":

log.Ctx(ctx).Info().Msgf("Creating cluster-external Provider client from KUBECONFIG %s", os.Getenv("KUBECONFIG"))

client, err = newExternalClusterClientFromFile(os.Getenv("KUBECONFIG"))

default:

log.Ctx(ctx).Info().Msgf("Creating cluster-external Provider client%s", withEndpoint)

client, err = newExternalClusterClient(p.Endpoint, p.CertAuthFilePath, p.Token)

}

if err != nil {

return nil, err

}

client.labelSelector = p.LabelSelector

return client, nil

}

2.2. Resource Watching:

The provider employs the Kubernetes client to observe changes in pertinent resources. Informers are typically utilized to efficiently track these resources and receive notifications upon creation, update, or deletion.

pkg/provider/kubernetes/crd/kubernetes.go

// Provide allows the k8s provider to provide configurations to traefik

// using the given configuration channel.

func (p *Provider) Provide(configurationChan chan<- dynamic.Message, pool *safe.Pool) error {

logger := log.With().Str(logs.ProviderName, providerName).Logger()

ctxLog := logger.WithContext(context.Background())

k8sClient, err := p.newK8sClient(ctxLog)

if err != nil {

return err

}

if p.AllowCrossNamespace {

logger.Warn().Msg("Cross-namespace reference between IngressRoutes and resources is enabled, please ensure that this is expected (see AllowCrossNamespace option)")

}

if p.AllowExternalNameServices {

logger.Warn().Msg("ExternalName service loading is enabled, please ensure that this is expected (see AllowExternalNameServices option)")

}

pool.GoCtx(func(ctxPool context.Context) {

operation := func() error {

eventsChan, err := k8sClient.WatchAll(p.Namespaces, ctxPool.Done())

if err != nil {

logger.Error().Err(err).Msg("Error watching kubernetes events")

timer := time.NewTimer(1 * time.Second)

select {

case <-timer.C:

return err

case <-ctxPool.Done():

return nil

}

}

throttleDuration := time.Duration(p.ThrottleDuration)

throttledChan := throttleEvents(ctxLog, throttleDuration, pool, eventsChan)

if throttledChan != nil {

eventsChan = throttledChan

}

for {

select {

case <-ctxPool.Done():

return nil

case event := <-eventsChan:

// Note that event is the *first* event that came in during this throttling interval -- if we're hitting our throttle, we may have dropped events.

// This is fine, because we don't treat different event types differently.

// But if we do in the future, we'll need to track more information about the dropped events.

conf := p.loadConfigurationFromCRD(ctxLog, k8sClient)

confHash, err := hashstructure.Hash(conf, nil)

switch {

case err != nil:

logger.Error().Err(err).Msg("Unable to hash the configuration")

case p.lastConfiguration.Get() == confHash:

logger.Debug().Msgf("Skipping Kubernetes event kind %T", event)

default:

p.lastConfiguration.Set(confHash)

configurationChan <- dynamic.Message{

ProviderName: providerName,

Configuration: conf,

}

}

// If we're throttling,

// we sleep here for the throttle duration to enforce that we don't refresh faster than our throttle.

// time.Sleep returns immediately if p.ThrottleDuration is 0 (no throttle).

time.Sleep(throttleDuration)

}

}

}

notify := func(err error, time time.Duration) {

logger.Error().Err(err).Msgf("Provider error, retrying in %s", time)

}

err := backoff.RetryNotify(safe.OperationWithRecover(operation), backoff.WithContext(job.NewBackOff(backoff.NewExponentialBackOff()), ctxPool), notify)

if err != nil {

logger.Error().Err(err).Msg("Cannot retrieve data")

}

})

return nil

}
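One detail of Provide worth isolating is the hash comparison that suppresses redundant updates: a configuration is only sent on the channel when its hash differs from the last one sent. The sketch below shows the idea with the standard library only. It is an assumption-laden illustration: the `config` and `publisher` types are hypothetical, and SHA-256 over the JSON encoding stands in for the hashstructure library used in the excerpt.

```go
package main

import (
	"crypto/sha256"
	"encoding/json"
	"fmt"
)

// config is a hypothetical, simplified dynamic configuration.
type config struct {
	Routers map[string]string `json:"routers"`
}

// publisher forwards a configuration only when its hash differs from
// the hash of the last configuration it sent.
type publisher struct {
	lastHash [sha256.Size]byte
	sent     bool
	out      chan<- config
}

// publish returns true if the configuration was forwarded and false if
// it was skipped as a duplicate.
func (p *publisher) publish(c config) (bool, error) {
	// encoding/json sorts map keys, so equal configurations encode identically.
	raw, err := json.Marshal(c)
	if err != nil {
		return false, err
	}
	h := sha256.Sum256(raw)
	if p.sent && h == p.lastHash {
		return false, nil
	}
	p.lastHash, p.sent = h, true
	p.out <- c
	return true, nil
}

func main() {
	out := make(chan config, 2)
	p := &publisher{out: out}

	a := config{Routers: map[string]string{"web": "Host(`example.com`)"}}
	b := config{Routers: map[string]string{"web": "Host(`example.org`)"}}

	// The second publish of a is skipped because nothing changed.
	for _, c := range []config{a, a, b} {
		forwarded, err := p.publish(c)
		fmt.Println(forwarded, err)
	}
}
```

Skipping unchanged configurations matters because many Kubernetes events do not affect routing at all, and each forwarded configuration triggers work downstream.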

2.3. Configuration Parsing:

Upon receiving resource updates, the provider parses the data to extract configuration details. These configurations are then converted into Traefik-specific formats for routers, services, and middlewares.

pkg/provider/kubernetes/crd/kubernetes.go

func (p *Provider) loadConfigurationFromCRD(ctx context.Context, client Client) *dynamic.Configuration {

// ...

conf := &dynamic.Configuration{

// TODO: choose between mutating and returning tlsConfigs

HTTP: p.loadIngressRouteConfiguration(ctx, client, tlsConfigs),

TCP: p.loadIngressRouteTCPConfiguration(ctx, client, tlsConfigs),

UDP: p.loadIngressRouteUDPConfiguration(ctx, client),

TLS: &dynamic.TLSConfiguration{

Options: buildTLSOptions(ctx, client),

Stores: stores,

},

}

//...

conf.HTTP.Middlewares[id] = &dynamic.Middleware{

AddPrefix: middleware.Spec.AddPrefix,

StripPrefix: middleware.Spec.StripPrefix,

StripPrefixRegex: middleware.Spec.StripPrefixRegex,

ReplacePath: middleware.Spec.ReplacePath,

ReplacePathRegex: middleware.Spec.ReplacePathRegex,

Chain: createChainMiddleware(ctxMid, middleware.Namespace, middleware.Spec.Chain),

IPWhiteList: middleware.Spec.IPWhiteList,

IPAllowList: middleware.Spec.IPAllowList,

Headers: middleware.Spec.Headers,

Errors: errorPage,

RateLimit: rateLimit,

RedirectRegex: middleware.Spec.RedirectRegex,

RedirectScheme: middleware.Spec.RedirectScheme,

BasicAuth: basicAuth,

DigestAuth: digestAuth,

ForwardAuth: forwardAuth,

InFlightReq: middleware.Spec.InFlightReq,

Buffering: middleware.Spec.Buffering,

CircuitBreaker: circuitBreaker,

Compress: middleware.Spec.Compress,

PassTLSClientCert: middleware.Spec.PassTLSClientCert,

Retry: retry,

ContentType: middleware.Spec.ContentType,

GrpcWeb: middleware.Spec.GrpcWeb,

Plugin: plugin,

}

}

//...

cb := configBuilder{

client: client,

allowCrossNamespace: p.AllowCrossNamespace,

allowExternalNameServices: p.AllowExternalNameServices,

allowEmptyServices: p.AllowEmptyServices,

}

for _, service := range client.GetTraefikServices() {

err := cb.buildTraefikService(ctx, service, conf.HTTP.Services)

if err != nil {

log.Ctx(ctx).Error().Str(logs.ServiceName, service.Name).Err(err).

Msg("Error while building TraefikService")

continue

}

}

//...

id := provider.Normalize(makeID(serversTransport.Namespace, serversTransport.Name))

conf.HTTP.ServersTransports[id] = &dynamic.ServersTransport{

ServerName: serversTransport.Spec.ServerName,

InsecureSkipVerify: serversTransport.Spec.InsecureSkipVerify,

RootCAs: rootCAs,

Certificates: certs,

DisableHTTP2: serversTransport.Spec.DisableHTTP2,

MaxIdleConnsPerHost: serversTransport.Spec.MaxIdleConnsPerHost,

ForwardingTimeouts: forwardingTimeout,

PeerCertURI: serversTransport.Spec.PeerCertURI,

Spiffe: serversTransport.Spec.Spiffe,

}

}

// ...

for _, serversTransportTCP := range client.GetServersTransportTCPs() {

var tcpServerTransport dynamic.TCPServersTransport

tcpServerTransport.SetDefaults()

if serversTransportTCP.Spec.DialTimeout != nil {

// ...

}

if serversTransportTCP.Spec.DialKeepAlive != nil {

// ...

}

if serversTransportTCP.Spec.TerminationDelay != nil {

// ...

}

if serversTransportTCP.Spec.TLS != nil {

var rootCAs []types.FileOrContent

for _, secret := range serversTransportTCP.Spec.TLS.RootCAsSecrets {

caSecret, err := loadCASecret(serversTransportTCP.Namespace, secret, client)

if err != nil {

logger.Error().

Err(err).

Str("rootCAs", secret).

Msg("Error while loading rootCAs")

continue

}

rootCAs = append(rootCAs, types.FileOrContent(caSecret))

}

var certs tls.Certificates

for _, secret := range serversTransportTCP.Spec.TLS.CertificatesSecrets {

tlsCert, tlsKey, err := loadAuthTLSSecret(serversTransportTCP.Namespace, secret, client)

if err != nil {

logger.Error().

Err(err).

Str("certificates", secret).

Msg("Error while loading certificates")

continue

}

certs = append(certs, tls.Certificate{

CertFile: types.FileOrContent(tlsCert),

KeyFile: types.FileOrContent(tlsKey),

})

}

tcpServerTransport.TLS = &dynamic.TLSClientConfig{

ServerName: serversTransportTCP.Spec.TLS.ServerName,

InsecureSkipVerify: serversTransportTCP.Spec.TLS.InsecureSkipVerify,

RootCAs: rootCAs,

Certificates: certs,

PeerCertURI: serversTransportTCP.Spec.TLS.PeerCertURI,

}

tcpServerTransport.TLS.Spiffe = serversTransportTCP.Spec.TLS.Spiffe

}

id := provider.Normalize(makeID(serversTransportTCP.Namespace, serversTransportTCP.Name))

conf.TCP.ServersTransports[id] = &tcpServerTransport

}

}

2.4. Configuration Updates:

Providers transmit the Traefik-ready configurations to the watcher through configurationChan. The watcher then consolidates these updates and forwards them to the listener for application.

This process ensures that Traefik dynamically updates its routing rules and configurations to align with the evolving state of the Kubernetes cluster.
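The hand-off from providers to listeners can be approximated with a channel and a list of callbacks. The following is a rough sketch rather than the real ConfigurationWatcher: the `message` and `watcher` types are hypothetical, the configuration is reduced to a list of router names per provider, and throttling and validation are left out.

```go
package main

import (
	"fmt"
	"sync"
)

// message is a hypothetical stand-in for a provider update.
type message struct {
	provider string
	routers  []string
}

// watcher keeps the latest message of each provider and notifies
// listeners whenever a new message arrives.
type watcher struct {
	in        chan message
	listeners []func(map[string][]string)
}

// addListener registers a callback invoked on every configuration change.
func (w *watcher) addListener(l func(map[string][]string)) {
	w.listeners = append(w.listeners, l)
}

// run consumes messages until the input channel is closed.
func (w *watcher) run() {
	current := make(map[string][]string)
	for msg := range w.in {
		current[msg.provider] = msg.routers
		// Hand listeners a shallow copy of the map so that they cannot
		// add or remove providers in the watcher's own state.
		snapshot := make(map[string][]string, len(current))
		for k, v := range current {
			snapshot[k] = v
		}
		for _, l := range w.listeners {
			l(snapshot)
		}
	}
}

func main() {
	w := &watcher{in: make(chan message)}
	w.addListener(func(conf map[string][]string) {
		fmt.Println("providers with configuration:", len(conf))
	})

	var wg sync.WaitGroup
	wg.Add(1)
	go func() {
		defer wg.Done()
		w.run()
	}()

	w.in <- message{provider: "docker", routers: []string{"web"}}
	w.in <- message{provider: "kubernetes", routers: []string{"api"}}
	close(w.in)
	wg.Wait()
}
```

Keeping one entry per provider means an update from one provider never discards the configuration contributed by another.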

3. Switcher:

The essence of the switcher is the combination of sync.RWMutex + interface{}: a value guarded by a read-write lock, so that the handler can be read and replaced at runtime in a thread-safe manner.

pkg/safe/safe.go

// Safe encapsulates a thread-safe value.

type Safe struct {

value interface{}

lock sync.RWMutex

}

pkg/server/server_entrypoint_tcp.go

func createHTTPServer(ctx context.Context, ln net.Listener, configuration *static.EntryPoint, withH2c bool, reqDecorator *requestdecorator.RequestDecorator) (*httpServer, error) {

if configuration.HTTP2.MaxConcurrentStreams < 0 {

return nil, errors.New("max concurrent streams value must be greater than or equal to zero")

}

httpSwitcher := middlewares.NewHandlerSwitcher(router.BuildDefaultHTTPRouter())

next, err := alice.New(requestdecorator.WrapHandler(reqDecorator)).Then(httpSwitcher)

if err != nil {

return nil, err

}

pkg/middlewares/handler_switcher.go

// NewHandlerSwitcher builds a new instance of HTTPHandlerSwitcher.

func NewHandlerSwitcher(newHandler http.Handler) (hs *HTTPHandlerSwitcher) {

return &HTTPHandlerSwitcher{

handler: safe.New(newHandler),

}

}

func (h *HTTPHandlerSwitcher) ServeHTTP(rw http.ResponseWriter, req *http.Request) {

handlerBackup := h.handler.Get().(http.Handler)

handlerBackup.ServeHTTP(rw, req)

}

// GetHandler returns the current http.ServeMux.

func (h *HTTPHandlerSwitcher) GetHandler() (newHandler http.Handler) {

handler := h.handler.Get().(http.Handler)

return handler

}

// UpdateHandler safely updates the current http.ServeMux with a new one.

func (h *HTTPHandlerSwitcher) UpdateHandler(newHandler http.Handler) {

h.handler.Set(newHandler)

}
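To see the switcher idea in isolation, here is a small self-contained sketch that swaps an HTTP handler while a server keeps the same entry point. It is a simplified stand-in, not the HTTPHandlerSwitcher itself: the `switcher` type and the `text` and `call` helpers are hypothetical, and the handler is stored as a typed field instead of going through safe.Safe.

```go
package main

import (
	"fmt"
	"net/http"
	"net/http/httptest"
	"sync"
)

// switcher serves requests with a handler that can be replaced at runtime.
type switcher struct {
	mu      sync.RWMutex
	handler http.Handler
}

// ServeHTTP reads the current handler under a read lock and delegates to it.
func (s *switcher) ServeHTTP(rw http.ResponseWriter, req *http.Request) {
	s.mu.RLock()
	h := s.handler
	s.mu.RUnlock() // release before serving so a slow request never blocks an update
	h.ServeHTTP(rw, req)
}

// update replaces the current handler under a write lock.
func (s *switcher) update(h http.Handler) {
	s.mu.Lock()
	s.handler = h
	s.mu.Unlock()
}

// text returns a handler that writes a fixed body.
func text(body string) http.Handler {
	return http.HandlerFunc(func(rw http.ResponseWriter, _ *http.Request) {
		fmt.Fprint(rw, body)
	})
}

// call sends one request through the handler and returns the response body.
func call(h http.Handler) string {
	rec := httptest.NewRecorder()
	h.ServeHTTP(rec, httptest.NewRequest(http.MethodGet, "/", nil))
	return rec.Body.String()
}

func main() {
	s := &switcher{handler: text("v1")}
	fmt.Println(call(s))

	// A configuration change builds a new handler and swaps it in.
	s.update(text("v2"))
	fmt.Println(call(s))
}
```

Requests already in flight finish on the handler they started with, while new requests pick up the replacement, which is what allows routing changes without restarting the listener.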

Health Checks: Ensuring Availability

Traefik conducts health checks on both itself and backend services to guarantee that traffic is routed only to operational instances.

Self Health Check

Traefik implements self-health checks through the /ping endpoint. This endpoint can be invoked by external systems to ascertain Traefik’s health status.

// Do try to do a healthcheck.

func Do(staticConfiguration static.Configuration) (*http.Response, error) {

if staticConfiguration.Ping == nil {

return nil, errors.New("please enable `ping` to use health check")

}

ep := staticConfiguration.Ping.EntryPoint

if ep == "" {

ep = "traefik"

}

pingEntryPoint, ok := staticConfiguration.EntryPoints[ep]

if !ok {

return nil, fmt.Errorf("ping: missing %s entry point", ep)

}

client := &http.Client{Timeout: 5 * time.Second}

protocol := "http"

path := "/"

return client.Head(protocol + "://" + pingEntryPoint.GetAddress() + path + "ping")

}

This endpoint returns a 200 OK status when Traefik is operational. A failed health check results in a non-200 status code, signaling an issue.
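An external system can probe the endpoint in much the same way. The sketch below is an illustration under assumptions: the `healthy` and `newPingServer` functions are hypothetical, and a local test server stands in for a running Traefik instance with ping enabled.

```go
package main

import (
	"fmt"
	"net/http"
	"net/http/httptest"
	"time"
)

// healthy sends a HEAD request to <baseURL>/ping and reports whether
// the answer was 200 OK.
func healthy(baseURL string) (bool, error) {
	client := &http.Client{Timeout: 5 * time.Second}
	resp, err := client.Head(baseURL + "/ping")
	if err != nil {
		return false, err
	}
	defer resp.Body.Close()
	return resp.StatusCode == http.StatusOK, nil
}

// newPingServer starts a local test server that answers 200 OK on /ping.
// It stands in for a Traefik instance in this sketch.
func newPingServer() *httptest.Server {
	mux := http.NewServeMux()
	mux.HandleFunc("/ping", func(rw http.ResponseWriter, _ *http.Request) {
		rw.WriteHeader(http.StatusOK)
	})
	return httptest.NewServer(mux)
}

func main() {
	srv := newPingServer()
	defer srv.Close()

	ok, err := healthy(srv.URL)
	fmt.Println(ok, err)
}
```

A connection error and a non-200 status are reported separately here, since the first usually means the process is unreachable and the second that it is running but unhealthy.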

Backend Service Health Checks

Traefik supports health checks for backend services across HTTP, HTTPS, and gRPC protocols. Configurable health checks enable the assessment of backend server availability.

The following is an abridged version of how Traefik conducts backend service health checks:

- Configuration: Health check specifics are set in the service configuration, detailing parameters like the health check path, interval, and timeout.

- Health Checker: A ServiceHealthChecker is instantiated for each service with enabled health checks.

- Periodic Checks: The health checker periodically sends requests to backend servers based on the interval setting.

- Status Updates: The health checker updates each server’s status according to the health check response.

- Load Balancer Updates: The load balancer is notified of any status changes, which it considers when distributing requests.

Here’s a snippet of the Go code for the ServiceHealthChecker:

pkg/healthcheck/healthcheck.go

func NewServiceHealthChecker(ctx context.Context, metrics metricsHealthCheck, config *dynamic.ServerHealthCheck, service StatusSetter, info *runtime.ServiceInfo, transport http.RoundTripper, targets map[string]*url.URL) *ServiceHealthChecker {

logger := log.Ctx(ctx)

interval := time.Duration(config.Interval)

if interval <= 0 {

logger.Error().Msg("Health check interval smaller than zero")

interval = time.Duration(dynamic.DefaultHealthCheckInterval)

}

timeout := time.Duration(config.Timeout)

if timeout <= 0 {

logger.Error().Msg("Health check timeout smaller than zero")

timeout = time.Duration(dynamic.DefaultHealthCheckTimeout)

}

client := &http.Client{

Transport: transport,

}

if config.FollowRedirects != nil && !*config.FollowRedirects {

client.CheckRedirect = func(req *http.Request, via []*http.Request) error {

return http.ErrUseLastResponse

}

}

return &ServiceHealthChecker{

balancer: service,

info: info,

config: config,

interval: interval,

timeout: timeout,

targets: targets,

client: client,

metrics: metrics,

}

}

func (shc *ServiceHealthChecker) Launch(ctx context.Context) {

ticker := time.NewTicker(shc.interval)

defer ticker.Stop()

for {

select {

case <-ctx.Done():

return

case <-ticker.C:

for proxyName, target := range shc.targets {

select {

case <-ctx.Done():

return

default:

}

up := true

serverUpMetricValue := float64(1)

if err := shc.executeHealthCheck(ctx, shc.config, target); err != nil {

// The context is canceled when the dynamic configuration is refreshed.

if errors.Is(err, context.Canceled) {

return

}

log.Ctx(ctx).Warn().

Str("targetURL", target.String()).

Err(err).

Msg("Health check failed.")

up = false

serverUpMetricValue = float64(0)

}

shc.balancer.SetStatus(ctx, proxyName, up)

statusStr := runtime.StatusDown

if up {

statusStr = runtime.StatusUp

}

shc.info.UpdateServerStatus(target.String(), statusStr)

shc.metrics.ServiceServerUpGauge().

With("service", proxyName, "url", target.String()).

Set(serverUpMetricValue)

}

}

}

}

func (shc *ServiceHealthChecker) executeHealthCheck(ctx context.Context, config *dynamic.ServerHealthCheck, target *url.URL) error {

ctx, cancel := context.WithDeadline(ctx, time.Now().Add(shc.timeout))

defer cancel()

if config.Mode == modeGRPC {

return shc.checkHealthGRPC(ctx, target)

}

return shc.checkHealthHTTP(ctx, target)

}

// checkHealthHTTP returns an error with a meaningful description if the health check failed.

// Dedicated to HTTP servers.

func (shc *ServiceHealthChecker) checkHealthHTTP(ctx context.Context, target *url.URL) error {

req, err := shc.newRequest(ctx, target)

if err != nil {

return fmt.Errorf("create HTTP request: %w", err)

}

resp, err := shc.client.Do(req)

if err != nil {

return fmt.Errorf("HTTP request failed: %w", err)

}

defer resp.Body.Close()

if shc.config.Status == 0 && (resp.StatusCode < http.StatusOK || resp.StatusCode >= http.StatusBadRequest) {

return fmt.Errorf("received error status code: %v", resp.StatusCode)

}

if shc.config.Status != 0 && shc.config.Status != resp.StatusCode {

return fmt.Errorf("received error status code: %v expected status code: %v", resp.StatusCode, shc.config.Status)

}

return nil

}

This code illustrates the health checker dispatching a health check request and updating the server status based on the response. The load balancer then uses this information to direct traffic only to responsive servers.
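The status rule at the end of checkHealthHTTP is easy to miss, so it is restated here as a standalone function. This is a sketch: `checkStatus` is a hypothetical name and the error messages are shortened, but the conditions follow the excerpt above. When no expected status is configured (0), any 2xx or 3xx response counts as healthy; otherwise the status must match exactly.

```go
package main

import (
	"fmt"
	"net/http"
)

// checkStatus returns nil when the received status is considered healthy.
// With expected == 0, any status in [200, 400) is accepted; otherwise the
// received status must equal the expected one.
func checkStatus(expected, got int) error {
	if expected == 0 {
		if got < http.StatusOK || got >= http.StatusBadRequest {
			return fmt.Errorf("received error status code: %d", got)
		}
		return nil
	}
	if expected != got {
		return fmt.Errorf("received status code %d, expected %d", got, expected)
	}
	return nil
}

func main() {
	fmt.Println(checkStatus(0, http.StatusNoContent))                // healthy: 2xx with no expectation
	fmt.Println(checkStatus(0, http.StatusServiceUnavailable))       // unhealthy: 5xx
	fmt.Println(checkStatus(http.StatusOK, http.StatusNoContent))    // unhealthy: exact match required
}
```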

Middleware: Enhancing the Routing Process

Traefik’s middleware system allows for the injection of additional functionality into the request/response lifecycle. Middlewares are attached to routers to perform tasks such as authentication, rate limiting, and request transformation.

Traefik provides an extensive range of built-in middlewares, and custom middlewares can be integrated via plugins.

Middleware Processing Pipeline

The following outlines how Traefik processes middlewares:

- Configuration: Middlewares are defined in the dynamic configuration and referenced by name from routers.

- Middleware Chain: A chain of middlewares is constructed based on the configuration, with the order determining their execution sequence.

- Request Processing: Incoming requests traverse the middleware chain before reaching the backend service, allowing each middleware to alter the request or generate a response.

- Response Processing: After the backend service responds, the response is pushed back through the middleware chain in reverse order, permitting modification before reaching the client.
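The ordering described in the steps above, requests going in through the chain and responses coming back in reverse, can be demonstrated with plain net/http handlers. This is a minimal sketch and not Traefik’s builder: the `middleware` type and the `chain`, `trace`, and `run` functions are hypothetical, and the alice library used by Traefik is replaced by a short loop.

```go
package main

import (
	"fmt"
	"net/http"
	"net/http/httptest"
)

// middleware wraps a handler with additional behavior.
type middleware func(http.Handler) http.Handler

// chain wraps final so that the first middleware in the list runs first.
func chain(final http.Handler, mws ...middleware) http.Handler {
	h := final
	for i := len(mws) - 1; i >= 0; i-- {
		h = mws[i](h)
	}
	return h
}

// trace records when a middleware is entered and when it is left.
func trace(name string, log *[]string) middleware {
	return func(next http.Handler) http.Handler {
		return http.HandlerFunc(func(rw http.ResponseWriter, req *http.Request) {
			*log = append(*log, name+" in")
			next.ServeHTTP(rw, req)
			*log = append(*log, name+" out")
		})
	}
}

// run sends one request through two tracing middlewares and returns the
// order in which the steps were recorded.
func run() []string {
	var log []string
	backend := http.HandlerFunc(func(rw http.ResponseWriter, _ *http.Request) {
		log = append(log, "backend")
	})
	h := chain(backend, trace("auth", &log), trace("ratelimit", &log))
	h.ServeHTTP(httptest.NewRecorder(), httptest.NewRequest(http.MethodGet, "/", nil))
	return log
}

func main() {
	fmt.Println(run())
}
```

The recorded order is auth in, ratelimit in, backend, ratelimit out, auth out: the middleware listed first sees the request first and the response last.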

Source Code Analysis: Middleware Builder

The middleware.Builder is responsible for constructing the middleware chain from the configuration. It provides a BuildChain function that accepts a context and a list of middleware names and returns a pointer to an alice.Chain.

Here’s an abridged version of the BuildChain function:

pkg/server/middleware/middlewares.go

func (b *Builder) BuildChain(ctx context.Context, middlewares []string) *alice.Chain {

chain := alice.New()

for _, name := range middlewares {

middlewareName := provider.GetQualifiedName(ctx, name)

chain = chain.Append(func(next http.Handler) (http.Handler, error) {

constructorContext := provider.AddInContext(ctx, middlewareName)

if midInf, ok := b.configs[middlewareName]; !ok || midInf.Middleware == nil {

return nil, fmt.Errorf("middleware %q does not exist", middlewareName)

}

var err error

if constructorContext, err = checkRecursion(constructorContext, middlewareName); err != nil {

b.configs[middlewareName].AddError(err, true)

return nil, err

}

constructor, err := b.buildConstructor(constructorContext, middlewareName)

if err != nil {

b.configs[middlewareName].AddError(err, true)

return nil, err

}

handler, err := constructor(next)

if err != nil {

b.configs[middlewareName].AddError(err, true)

return nil, err

}

return handler, nil

})

}

return &chain

}

func checkRecursion(ctx context.Context, middlewareName string) (context.Context, error) {

currentStack, ok := ctx.Value(middlewareStackKey).([]string)

if !ok {

currentStack = []string{}

}

if inSlice(middlewareName, currentStack) {

return ctx, fmt.Errorf("could not instantiate middleware %s: recursion detected in %s", middlewareName, strings.Join(append(currentStack, middlewareName), "->"))

}

return context.WithValue(ctx, middlewareStackKey, append(currentStack, middlewareName)), nil

}

// it is the responsibility of the caller to make sure that b.configs[middlewareName].Middleware exists.

func (b *Builder) buildConstructor(ctx context.Context, middlewareName string) (alice.Constructor, error) {

config := b.configs[middlewareName]

if config == nil || config.Middleware == nil {

return nil, fmt.Errorf("invalid middleware %q configuration", middlewareName)

}

var middleware alice.Constructor

badConf := errors.New("cannot create middleware: multi-types middleware not supported, consider declaring two different pieces of middleware instead")

// ...

// Chain

if config.Chain != nil {

if middleware != nil {

return nil, badConf

}

var qualifiedNames []string

for _, name := range config.Chain.Middlewares {

qualifiedNames = append(qualifiedNames, provider.GetQualifiedName(ctx, name))

}

config.Chain.Middlewares = qualifiedNames

middleware = func(next http.Handler) (http.Handler, error) {

return chain.New(ctx, next, *config.Chain, b, middlewareName)

}

}

// ...

if middleware == nil {

return nil, fmt.Errorf("invalid middleware %q configuration: invalid middleware type or middleware does not exist", middlewareName)

}

// The tracing middleware is a NOOP if tracing is not setup on the middleware chain.

// Hence, regarding internal resources' observability deactivation,

// this would not enable tracing.

return tracing.WrapMiddleware(ctx, middleware), nil

}

This function iterates through middleware names to build a chain of constructors. Each constructor is tasked with creating a middleware instance and integrating it with the next handler in the chain.

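The buildConstructor function creates the constructor for a specific middleware based on its configuration: it checks which field of the configuration is set (config.Chain in the excerpt above) and instantiates the matching middleware, rejecting configurations that set more than one middleware type.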

This methodology enables Traefik to support a diverse array of middlewares with varying configurations, while promoting a well-organized and modular codebase.

Conclusion

Traefik’s architecture and source code exemplify a systematic and modular approach to managing static and dynamic configurations, provider integration, and health checks. Understanding these core principles and code elements empowers users to effectively harness Traefik for orchestrating complex deployments.