2. Abstractions at Different Levels

System Model

Programs in distributed systems:

- Run concurrently on independent nodes

- Communicate via network connections that may introduce uncertainty and message loss

- Have no shared memory or shared clock

The system model enumerates many assumptions related to specific system designs, covering the environment and facilities where the distributed system is implemented:

- What capabilities nodes have and how they fail

- How communication links operate and how they might fail

- Properties of the overall system, such as assumptions about time and order

A robust system model makes the weakest assumptions, while strong assumptions create system models that are easier to reason about.

Nodes in this Model

As hosts for computation and storage:

- Ability to execute programs

- Ability to store data into memory and stable persistent state

- Have a clock

Nodes execute deterministic algorithms.

Failure model: Crash-recovery rather than Byzantine fault tolerance (arbitrary errors).

Communication Links in this Model

Communication links connect nodes to each other and send messages in either direction.

The network is unreliable; messages are prone to delay and loss.

Network partition: The network is disconnected but nodes are alive.

Assumptions about Time and Order

Information travels at most at the speed of light.

If distances vary, the arrival time and order of messages between nodes may vary.

Synchronous System Model

Processes execute in lock-step; message transmission delay has a known upper bound; every process has an accurate clock.

Asynchronous System Model

No timing assumptions; processes execute at independent rates; message transmission delay implies no bound; useful clocks do not exist.

Consensus Problem

Consensus is reached when multiple nodes agree on some value.

- Agreement: Every correct node agrees on the same value.

- Integrity: Every correct process decides at most one value, and if it decides some value, then it must have been proposed by some process.

- Termination: All processes eventually reach a decision.

- Validity: If all correct processes propose the same value V, then all correct processes decide value V.

Two Impossible Results

FLP Impossibility

Assuming nodes can only fail by crashing; network is reliable, and typical timing assumptions of asynchronous system models hold: e.g., message delay has no bound.

In a minimal asynchronous model system where the network is reliable but nodes are allowed to fail (even just one), there is no deterministic consensus algorithm that can solve the consensus problem.

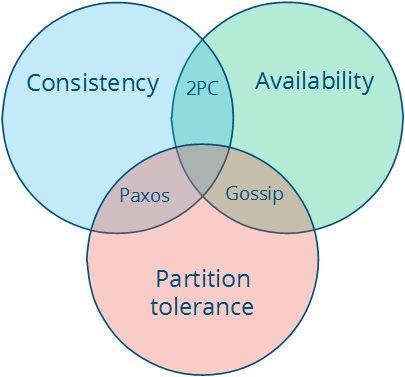

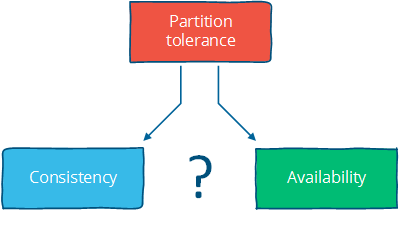

CAP Theorem

Three properties:

- (Strong) Consistency: All nodes see the same data at the same time.

- Availability: Node failures do not prevent survivors from continuing to operate.

- Partition Tolerance: The system continues to operate despite message loss due to network or node failure.

A system possessing all three properties simultaneously is impossible to achieve.

Three different system types:

- CA (Consistency + Availability): Fully strict quorum protocols, e.g., Two-Phase Commit.

- CP (Consistency + Partition Tolerance): Majority quorum protocols where a minority partition is unavailable, e.g., Paxos.

- AP (Availability + Partition Tolerance): Protocols using conflict resolution, e.g., Dynamo.

Consistency Model: A contract between the programmer and the system, where the system guarantees that if the programmer follows specific rules, the results of operations on the data store will be predictable.

- Strong Consistency Models (Able to maintain a single copy)

- Linearizable consistency

- Sequential consistency

- Weak Consistency

- Client-centric consistency models

- Causal consistency: strongest model available

- Eventual consistency models

Linearizable consistency requires that the order in which operations take effect equals the actual real-time order of operations. Sequential consistency allows operations to be reordered, as long as the order observed on every node remains consistent.